Nemotron-70B: Nvidia’s Latest Breakthrough in Open Weights AI Models

By Horay AI Team

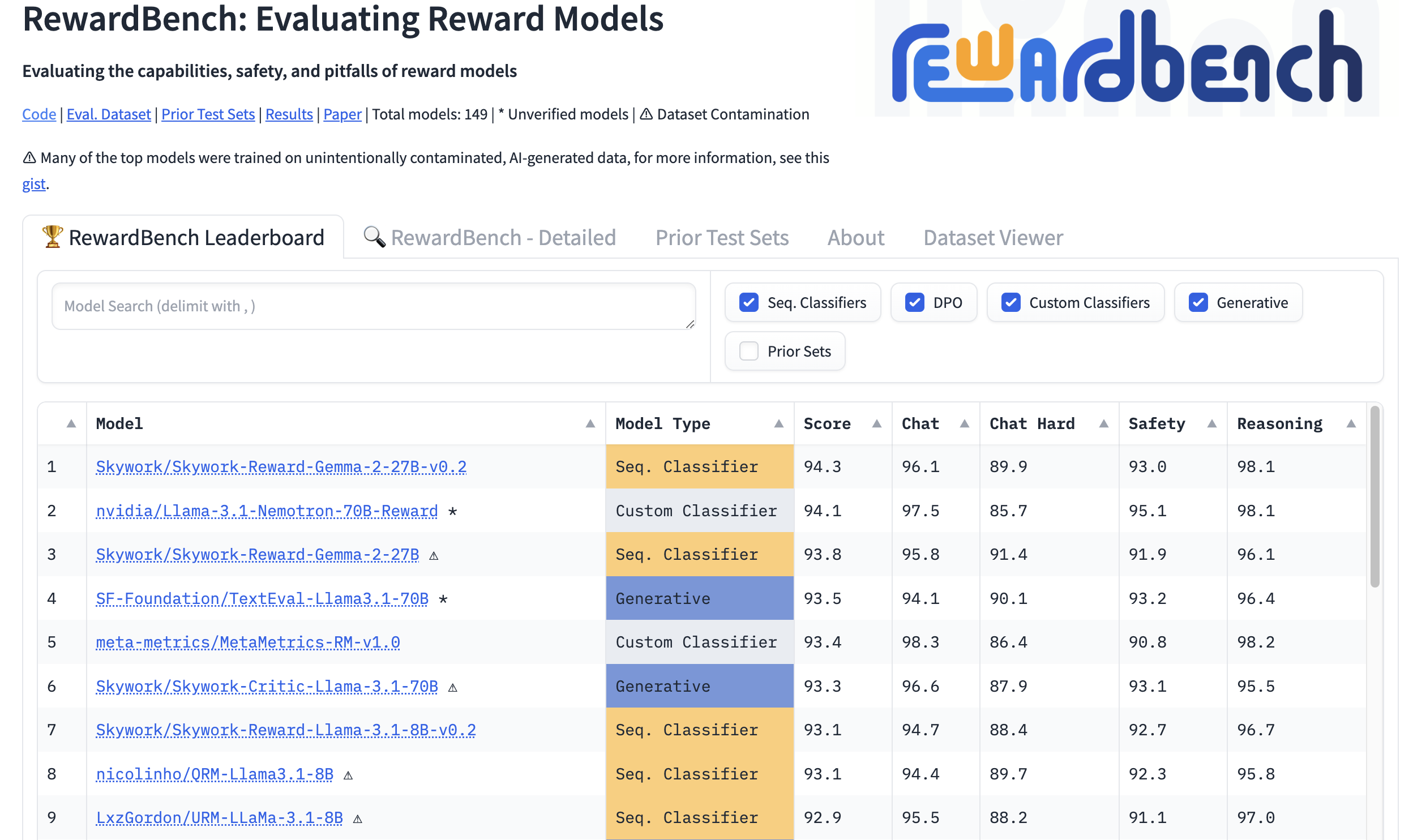

In mid-October 2024, Nvidia unveiled its latest large language model (LLM), Llama-3.1-Nemotron-70B, which quickly drew significant attention in the AI community for its strong benchmark performance. Within hours of its release, Nemotron-70B was hailed as “A Historic Release for Open Weights Models”. It surpassed established models such as Claude-3.5 and GPT-4o and landed in third place on AI model leaderboards, just behind OpenAI's o1 models.

In this blog post, we'll delve into the impressive capabilities of Nemotron-70B, explore its features and advantages, and take a closer look at Nvidia’s newly developed HelpSteer2-Preference Reward Model that supports this groundbreaking LLM.

How Nemotron-70B Compares to Claude and GPT

A recent YouTube video compared Nemotron-70B with Claude-3.5 and GPT-4o, which were previously among the top-performing models in the LLM community. The comparison covered a wide variety of tasks, ranging from creative writing to logical reasoning.

In some tests, outputs from Claude-3.5 and GPT-4o were easy to identify as AI-generated, even when the models were explicitly prompted to sound more human. In contrast, Nemotron-70B consistently produced more natural, human-sounding text. And because it has fewer content restrictions than its competitors, it lets users explore a wider range of applications without the limitations imposed by proprietary models.

Another strength cited for the model is its ability to incorporate up-to-date information from the internet. Note that this depends on how the model is served: the weights themselves have no built-in web access, so live information has to come from a search or retrieval layer in the interface. With such a layer in place, Nemotron-70B becomes practical for real-time use cases such as market analysis and news summarization.

HelpSteer2 Dataset: A New Approach

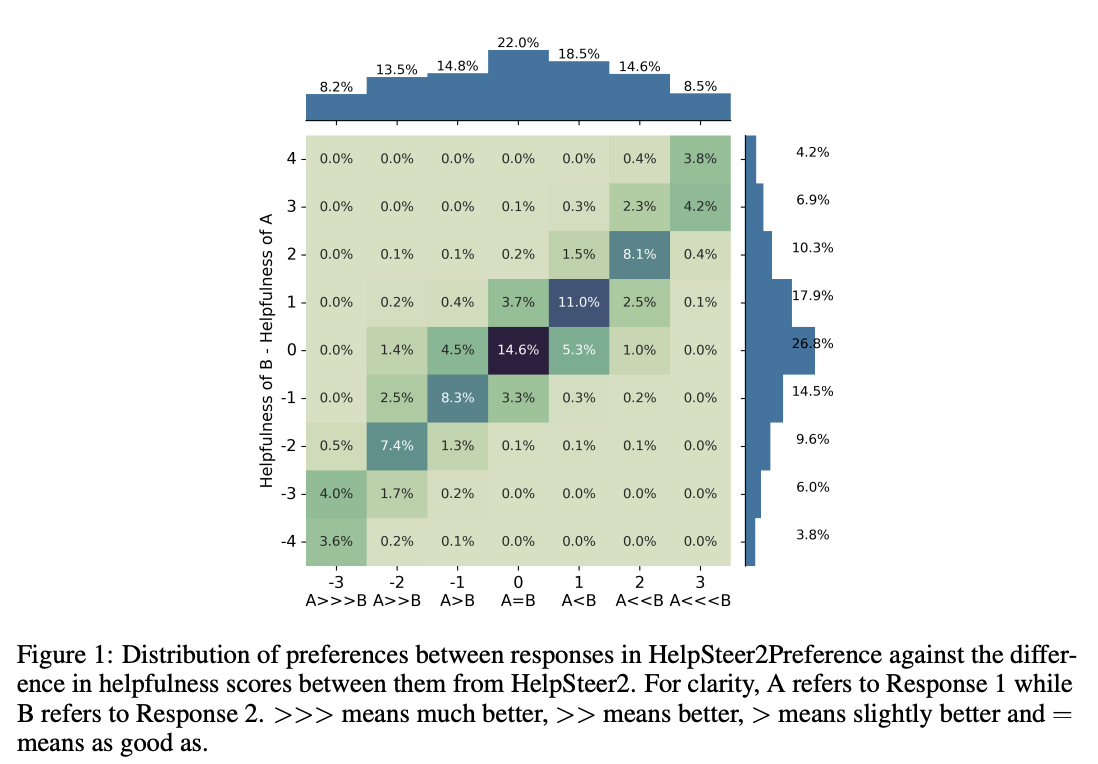

At the heart of Nemotron-70B's performance is Nvidia's HelpSteer2 dataset, which plays a crucial role in optimizing the model's outputs. The dataset is built to support the two reward-model styles most commonly used in training: Bradley-Terry style, which learns from pairwise preferences between two responses, and Regression style, which learns to predict an absolute rating for each individual response.

But here's the catch: there has been no clear evidence that one approach outperforms the other when both are trained on the same data. Each method needs data in a different format (pairwise preferences for Bradley-Terry, absolute ratings for Regression), and these formats are not always compatible. As a result, datasets annotated equally well for both approaches have been hard to find.
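The difference between the two styles is easiest to see in their training objectives. Below is a minimal sketch in plain Python, assuming the standard textbook forms: a pairwise logistic loss for Bradley-Terry and mean squared error for Regression. The function names are hypothetical, and this is not Nvidia's training code.

```python
import math

def bradley_terry_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(r_chosen - r_rejected)).

    Only the difference between the two rewards matters, so the training
    data is a pair of responses plus which one the annotator preferred.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)) for any sign of margin.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

def regression_loss(predicted: list[float], ratings: list[float]) -> float:
    """Pointwise loss: mean squared error against absolute human ratings."""
    if len(predicted) != len(ratings) or not ratings:
        raise ValueError("predicted and ratings must be non-empty and equal length")
    return sum((p - r) ** 2 for p, r in zip(predicted, ratings)) / len(ratings)

print(bradley_terry_loss(2.0, 0.0))             # small: the preferred response already scores higher
print(regression_loss([3.0, 1.0], [4.0, 1.0]))  # 0.5
```

Notice that the Bradley-Terry loss consumes a pair of responses and a winner, while the regression loss consumes one absolute score per response. That mismatch in data format is why matched datasets have been scarce.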

To solve this, Nvidia extended HelpSteer2 with preference annotations designed specifically for Bradley-Terry training. These annotations complement the existing Regression-style ratings, giving developers their first opportunity to compare the two approaches head-to-head on properly matched data. Better still, each preference annotation comes with a human-written justification, which makes the data easier to understand and interpret.
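A matched example might look like the hypothetical record below, where one prompt carries both per-response ratings (for Regression) and a pairwise preference with its justification (for Bradley-Terry). The field names, the 0-4 rating scale, and the -3 to 3 preference-strength scale are assumptions for illustration; check the dataset card for the actual schema. Using the preference strength as a weight on the pairwise loss is likewise one assumed option, not a documented requirement.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class MatchedExample:
    """Hypothetical record carrying both annotation styles for one prompt."""
    prompt: str
    response_1: str
    response_2: str
    helpfulness_1: float  # Regression-style rating for response_1 (assumed 0-4 scale)
    helpfulness_2: float  # Regression-style rating for response_2
    preference: int       # assumed -3..3: negative favours response_1, positive favours response_2
    justification: str    # human-written rationale for the preference

def regression_targets(ex: MatchedExample) -> list[tuple[str, float]]:
    """Regression training: each response is paired with its own rating."""
    return [(ex.response_1, ex.helpfulness_1), (ex.response_2, ex.helpfulness_2)]

def bradley_terry_target(ex: MatchedExample) -> Optional[tuple[str, str, int]]:
    """Bradley-Terry training: (chosen, rejected, strength), or None for a tie.

    The strength could be used to weight the pairwise loss (an assumption here).
    """
    if ex.preference == 0:
        return None
    strength = abs(ex.preference)
    if ex.preference < 0:
        return ex.response_1, ex.response_2, strength
    return ex.response_2, ex.response_1, strength

example = MatchedExample(
    prompt="Explain what a reward model does in one sentence.",
    response_1="It scores text.",
    response_2="It assigns a score to a response that reflects how much humans would prefer it.",
    helpfulness_1=2.0,
    helpfulness_2=4.0,
    preference=2,
    justification="Response 2 is more complete and explains what the score means.",
)
print(regression_targets(example))
print(bradley_terry_target(example))
```

Because both targets come from the same prompts and responses, a Bradley-Terry model and a Regression model trained on them can be compared on equal footing.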

With this enriched dataset, Nvidia ran the first direct comparison between Bradley-Terry and Regression models, gaining deeper insight into how each performs on equal footing. HelpSteer2 then allowed Nvidia to build a reward model that guides Nemotron-70B toward outputs aligned with human preferences and expectations. Nvidia has also open-sourced the dataset, giving developers the means to build more accurate AI systems of their own. The resulting reward model scored 94.1 on RewardBench, ranking second at the time of writing.
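The post does not detail how the reward model steers Nemotron-70B; reward models are typically used during training, for example in reinforcement learning from human feedback. As a simpler illustration of the core idea, a reward model can also rerank candidate responses at inference time ("best-of-n" sampling). This is a different technique from whatever Nvidia used in training, and `toy_reward` below is a hypothetical stand-in for a real neural reward model.

```python
from typing import Callable, Sequence

# A reward model maps (prompt, response) to a scalar: higher means "more preferred".
RewardFn = Callable[[str, str], float]

def best_of_n(prompt: str, candidates: Sequence[str], reward_fn: RewardFn) -> str:
    """Return the candidate response that the reward model scores highest."""
    if not candidates:
        raise ValueError("best_of_n needs at least one candidate")
    return max(candidates, key=lambda response: reward_fn(prompt, response))

def toy_reward(prompt: str, response: str) -> float:
    """Hypothetical stand-in for a trained reward model.

    Rewards responses containing a concrete number when the prompt asks
    "how many", and slightly penalizes length. A real reward model is a
    neural network trained on preference data such as HelpSteer2.
    """
    score = 0.0
    if "how many" in prompt.lower() and any(ch.isdigit() for ch in response):
        score += 1.0
    return score - 0.01 * len(response.split())

candidates = [
    "I am not sure, it is hard to say.",
    "There are 3 r's in strawberry.",
]
print(best_of_n("How many r's are in strawberry?", candidates, toy_reward))
```

Swapping `toy_reward` for a real reward model leaves `best_of_n` unchanged, since it only assumes a function that scores a (prompt, response) pair.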

Advantages of Nemotron-70B

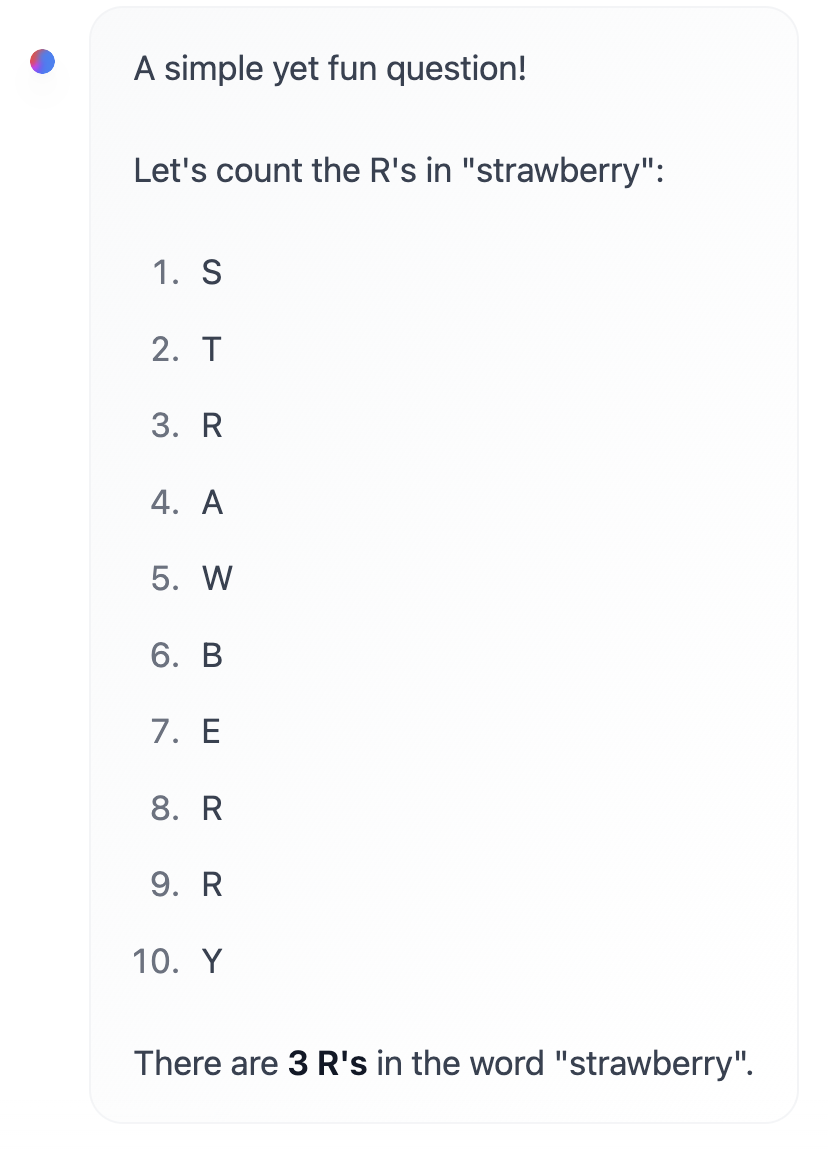

- 1. Contextual Understanding: Nemotron-70B's 70-billion-parameter architecture is key to its strong contextual understanding. This scale lets it capture nuances that often elude smaller models, including idioms and figurative language. Whether interpreting implied meaning or context-dependent expressions, Nemotron-70B consistently delivers accurate and relevant responses. For example, it correctly answers "How many R's are in strawberry?", a question that trips up many LLMs.

- 2. Lightning-Fast Response Generation: Speed and responsiveness are vital in today's fast-paced digital world. Running on Nvidia's GPU acceleration, the model generates responses in near real time, creating an almost seamless interaction experience. This makes it well suited to applications that need swift, human-like interactions, such as chatbots, virtual assistants, and live support systems.

- 3. Multilingual Mastery: In an increasingly globalized world, Nemotron-70B breaks down language barriers with support for over 30 languages, enabling communication across linguistic and cultural boundaries. This opens the door to global applications, from international customer support platforms to cross-lingual knowledge-sharing initiatives.

- 4. Emotional Intelligence & Empathy: Trained on a diverse range of emotional data, the model can recognize emotion and respond with empathy, making it valuable for sensitive applications. Whether providing support on mental health platforms or showing understanding in customer service interactions, Nemotron-70B's emotional intelligence can enhance the user experience and foster more meaningful connections.

- 5. State-of-the-Art Conversational Flow: Conversing with Nemotron-70B is similar to interacting with a highly articulate and insightful person. Its responses are contextually relevant, coherent, and logically consistent, closely simulating human discussion. This makes it a strong fit for applications where user engagement is paramount, from interactive storytelling and educational platforms to sophisticated chatbots.
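The strawberry question is a popular stress test because language models usually read text as multi-character tokens rather than individual letters, which makes letter counting surprisingly error-prone for them. The correct answer is trivial to check with ordinary code; `count_letter` is just an illustrative helper:

```python
def count_letter(word: str, letter: str) -> int:
    """Count occurrences of a single letter in a word, ignoring case."""
    return word.lower().count(letter.lower())

print(count_letter("strawberry", "R"))  # 3
```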

Getting started with Nemotron-70B

Hugging Face: Nemotron-70B is available on Hugging Face, making it easy to integrate into your own projects.
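Before downloading the weights, it helps to estimate how much GPU memory they need. A back-of-the-envelope figure is parameter count times bytes per parameter. The sketch below assumes exactly 70 billion parameters and covers the weights only, ignoring the KV cache, activations, and framework overhead, so real requirements are higher.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

NUM_PARAMS = 70e9  # "70B" from the model name; the exact count differs slightly

# Common storage precisions and their size per parameter in bytes.
for precision, nbytes in [("BF16/FP16", 2), ("INT8", 1), ("4-bit", 0.5)]:
    print(f"{precision}: ~{weight_memory_gb(NUM_PARAMS, nbytes):.0f} GB")
```

At 16-bit precision the weights alone (about 140 GB) exceed the memory of a single 80 GB GPU, so running the model locally typically means spreading it across several GPUs or using a quantized variant.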

GitHub: Nvidia also provides related open-source code and documentation on GitHub.

Nvidia API Catalog: For enterprises and developers looking for more robust integration options, Nvidia offers access to Nemotron-70B through its API Catalog.
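As a rough illustration of what API access involves, the sketch below builds and sends a chat request using only Python's standard library. It assumes the API Catalog exposes an OpenAI-compatible chat-completions endpoint at the URL shown and accepts the model ID shown; treat both as assumptions to confirm on the model's catalog page, along with how to obtain an API key.

```python
import json
import urllib.request

# Assumptions: endpoint URL and model ID as listed in the Nvidia API Catalog
# at the time of writing; verify both before use.
BASE_URL = "https://integrate.api.nvidia.com/v1/chat/completions"
MODEL_ID = "nvidia/llama-3.1-nemotron-70b-instruct"

def build_request(prompt: str, temperature: float = 0.5, max_tokens: int = 512) -> dict:
    """Build an OpenAI-style chat-completions payload with a single user message."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }

def send(payload: dict, api_key: str) -> str:
    """POST the payload and return the text of the first choice."""
    req = urllib.request.Request(
        BASE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

For example, `send(build_request("How many r's are in strawberry?"), api_key)` would return the model's reply as a string, assuming a valid key and the endpoint above. Because the payload follows the OpenAI chat format, an OpenAI-compatible client library pointed at the same base URL should work as well.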

Conclusion

In conclusion, Nvidia's Nemotron-70B represents a major leap for open-weights AI models, outperforming previous top contenders such as Claude-3.5 and GPT-4o. Nvidia has also open-sourced HelpSteer2, a high-quality preference-modeling dataset that is likely the first open-source general-domain preference dataset to include human-written preference rationales. With its wide range of strengths, Nemotron-70B is poised to transform applications from chatbots and virtual assistants to educational platforms and beyond. Because the model is readily available on several platforms, developers and enterprises can quickly integrate it into their projects and unlock new frontiers in human-AI interaction.

Frequently Asked Questions (FAQs)

- Q: Is Llama-3.1-Nemotron-70B-Instruct free to use? A: Yes, the model is currently available for free, subject to its terms of use.

- Q: Can I use this model for commercial purposes? A: Yes, it is ready for commercial use, but ensure compliance with Nvidia's terms and industry standards.

- Q: How does the HelpSteer2-Preference Reward Model enhance AI's responses? A: It combines probabilistic pairwise comparisons with regression analysis to provide more nuanced and human-preferred responses.